A/B Testing for Landing Pages: Complete Guide to Increasing Conversions

Ready to fix your low conversion rate? This guide to A/B testing for landing pages reveals proven strategies to optimize every element, from headlines to CTAs. Learn how to test smarter, convert better, and grow revenue from existing traffic.

Eddy Enoma

10/17/2025 | 15 min read

Proven Strategies For Testing Headlines, CTAs, and Copy That Turn Visitors into Customers

You've built a beautiful landing page. The design looks sharp, your offer is solid, and traffic is flowing in nicely. But here's the problem: visitors aren't converting. They're landing, looking around for a few seconds, and then bouncing right back to wherever they came from.

Sound familiar? You're not alone. The average landing page conversion rate hovers around 2.35%, according to recent industry benchmarks. That means for every 100 people who visit your page, roughly 98 of them leave without taking action. Those aren't great odds, especially when you're paying for that traffic. This is exactly why A/B testing for landing pages has become essential for any business serious about growth.

The truth is, most landing pages fail not because they're terrible, but because they're built on assumptions instead of evidence. You assume your headline is compelling. You assume people understand your value proposition. You assume that bright green button will make them click. But assumptions don't pay the bills. Data does.

That's where A/B testing comes in. Instead of guessing what works, you can actually prove it. And the results can be staggering. Companies that commit to systematic testing report conversion rate improvements anywhere from 20% to 300% over time. We're talking about the difference between struggling to hit your targets and consistently exceeding them, all from the same amount of traffic.

If you're ready to stop guessing and start optimizing based on real user behavior, professional A/B testing tools can help you set up experiments in minutes, even if you've never run a test before.

Understanding A/B Testing for Landing Pages Beyond the Buzzword

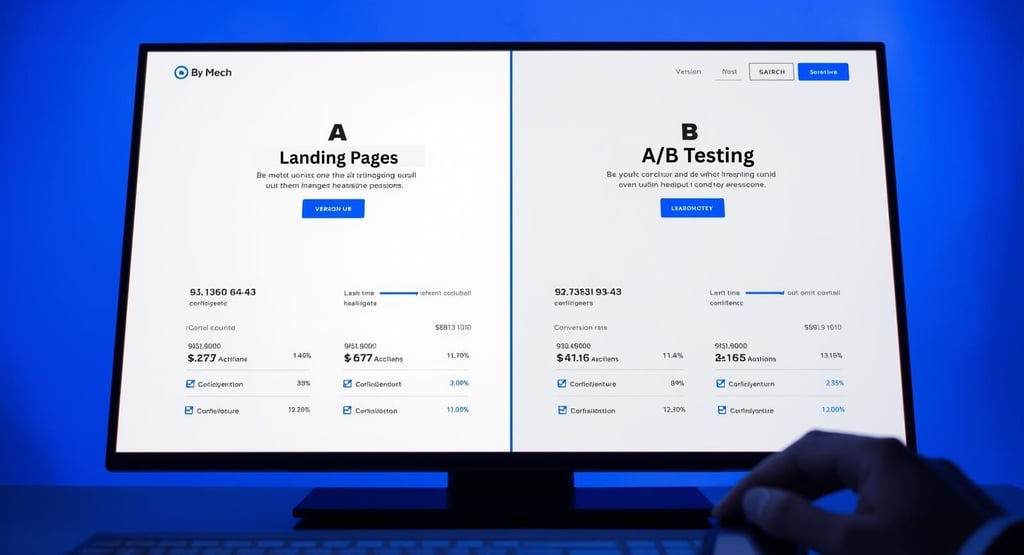

Let's cut through the jargon for a second. A/B testing is simply showing two different versions of your page to real visitors and measuring which one performs better. Version A might have a headline that says "Transform Your Marketing in 30 Days" while Version B says "Get More Customers Without Spending More." Half your traffic sees one, half sees the other, and you track which version drives more conversions.

The concept is straightforward, but the impact is profound. According to research from Invesp, businesses that use A/B testing tools are 60% more likely to see increases in conversions compared to those that don't test at all. That's not a small edge. That's the difference between growing and staying stuck.

But here's what most people get wrong about testing. They think it's about making wild guesses and hoping something sticks. In reality, effective testing is methodical. You start with a hypothesis based on user behavior or psychology, you design a test around that hypothesis, and then you let the data tell you what actually works. It's scientific, not scattershot.

The beauty of modern testing is that you don't need a PhD in statistics or a massive tech team to get started. Today's A/B testing platforms make it possible for anyone with a website to run controlled experiments and get reliable results, often with just a few clicks and zero coding required.

The Real Cost of Not Testing

Every day you don't test is a day you're leaving money on the table. Let's do some quick math to make this concrete. Say you're running an e-commerce site that gets 10,000 visitors per month. Your current conversion rate is 2%, which means 200 sales. If your average order value is $50, that's $10,000 in monthly revenue.

Now imagine you run a series of tests and improve your conversion rate to just 2.5%. That's only a 0.5 percentage point increase, but it translates to 50 extra sales per month. At $50 per order, you've just added $2,500 to your monthly revenue, or $30,000 per year. And you did it without spending a single extra dollar on advertising.
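The arithmetic above is easy to reproduce, and a few lines of Python make it simple to plug in your own numbers. This is a minimal sketch: `monthly_revenue` is a hypothetical helper, and it assumes revenue is simply visitors times conversion rate times average order value (no refunds, repeat purchases, or seasonality).

```python
def monthly_revenue(visitors: int, conversion_rate: float, avg_order_value: float) -> float:
    """Revenue = visitors x conversion rate x average order value (simplified model)."""
    return visitors * conversion_rate * avg_order_value


visitors = 10_000
aov = 50.0

baseline = monthly_revenue(visitors, 0.02, aov)   # 2.0% -> 200 orders
improved = monthly_revenue(visitors, 0.025, aov)  # 2.5% -> 250 orders
monthly_gain = improved - baseline

print(f"Baseline: ${baseline:,.0f}/month")
print(f"Improved: ${improved:,.0f}/month")
print(f"Gain: ${monthly_gain:,.0f}/month, ${monthly_gain * 12:,.0f}/year")
```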

Scale that thinking across multiple tests, multiple pages, and multiple months, and you start to see why companies like Booking.com run over 25,000 tests every year. They understand that small improvements compound into massive competitive advantages.

But it's not just about the money you're missing. It's about the insights you're not gaining. Every test teaches you something about your customers. What language resonates with them? What objections are holding them back? What proof points actually build trust? This knowledge becomes a strategic asset that informs not just your landing pages, but your entire marketing approach.

The good news? You can start capturing these insights today with the right testing infrastructure in place. Smart A/B testing software tracks not just which variation wins, but why it wins, giving you actionable intelligence you can apply across all your marketing.

Building Your Optimization Strategy From the Ground Up

The biggest mistake people make when they start testing is treating it like a random activity. They'll change their button color one week, tweak a headline the next, and then wonder why nothing seems to work. That's not testing. That's just tinkering.

Real optimization starts with understanding what you're trying to achieve. Is your goal to get more email signups? Increase demo requests? Drive direct purchases? Your entire testing strategy should flow from that single primary objective. Everything else is secondary.

Once you've nailed down your goal, you need to understand who you're optimizing for. A landing page that works for busy executives won't work for recent college graduates. A page that converts bargain hunters won't convert premium buyers. You need to know your audience well enough to craft messages and experiences that speak directly to their needs, fears, and desires.

This is where most people need to slow down and do some homework. Look at your analytics. Read support tickets. Talk to customers. What questions do they ask before buying? What hesitations do they express? What benefits do they care about most? The answers to these questions become the foundation for your testing hypotheses.

Identifying What's Actually Broken

Before you start changing things, you need to diagnose the real problems. Walk through your landing page with fresh eyes and ask yourself some hard questions. Is your value proposition immediately clear, or does someone need to read three paragraphs to understand what you're offering? Can a visitor figure out what action to take within five seconds, or is your page cluttered with competing calls to action?

Research from the Nielsen Norman Group shows that users typically leave web pages within 10 to 20 seconds unless you give them a compelling reason to stay. That means you have an incredibly short window to communicate value and build just enough trust to earn the next click.

Common friction points include headlines that are clever but vague, copy that focuses on features instead of benefits, calls to action that are passive or generic, and a complete lack of social proof. If your headline says "Welcome to Our Platform" instead of describing what the platform actually does for the user, you've already lost half your visitors.

Trust signals matter more than most people realize. A study by BrightLocal found that 79% of consumers trust online reviews as much as personal recommendations. If your landing page doesn't include testimonials, case studies, customer logos, or data points that demonstrate credibility, you're asking people to take a leap of faith. Most won't.

This is exactly why systematic testing matters so much. When you use reliable A/B testing tools, you can test different trust signals, proof points, and value propositions to discover exactly what makes your specific audience feel confident enough to convert.

Crafting Hypotheses That Actually Mean Something

A good hypothesis isn't just "let's make the button bigger." It's a specific, testable prediction about user behavior based on psychological principles or observed patterns. The format should be: If we change X to Y, then Z will happen because of this reason.
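If you keep a record of your tests, it can help to store each hypothesis in a fixed structure so that none of its parts gets skipped. The sketch below is one hypothetical way to do that in Python; the `Hypothesis` class and its field names are illustrative assumptions, not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """A testable prediction in the 'If we change X to Y, then Z, because W' format."""
    element: str           # the page element being changed
    change_from: str       # current version (X)
    change_to: str         # proposed version (Y)
    expected_outcome: str  # measurable prediction (Z)
    rationale: str         # why you expect it (the "because")

    def statement(self) -> str:
        return (
            f"If we change the {self.element} from {self.change_from} "
            f"to {self.change_to}, then {self.expected_outcome} "
            f"because {self.rationale}."
        )


h = Hypothesis(
    element="headline",
    change_from="a feature-focused statement",
    change_to="a benefit-focused question",
    expected_outcome="we'll see a 15% increase in demo requests",
    rationale="visitors will immediately identify whether this product solves their problem",
)
print(h.statement())
```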

For example, a strong hypothesis might be: "If we change the headline from a feature-focused statement to a benefit-focused question, then we'll see a 15% increase in demo requests because visitors will immediately identify whether this product solves their problem."

See the difference? You're not just changing things randomly. You're making an informed prediction based on reasoning. This matters because even when tests don't produce winners, they produce learning. You'll understand not just what worked, but why it worked or didn't work.

The best hypotheses come from combining user research with established psychological principles. Things like loss aversion (people are more motivated to avoid losses than to achieve gains), social proof (we look to others to guide our decisions), and the principle of specificity (concrete claims are more credible than vague ones). When you ground your tests in these frameworks, your hypotheses get sharper and your tests are far more likely to produce meaningful wins.

Ready to Start Testing? Don't waste another day guessing what might work. Get started with proven A/B testing software that helps you design better experiments, track results accurately, and turn insights into revenue. No technical expertise required.

Designing Tests That Give You Clear Answers

Here's where many people get tripped up. They want to test everything at once. New headline, new images, new copy, new button color, new form fields. Then, when conversions change, they have no idea which element made the difference. That's not a test. That's chaos.

The gold standard for most situations is a clean A/B test where you change one element and measure the impact. Want to test headlines? Keep everything else identical and only vary the headline. This gives you a clear cause-and-effect relationship: if the results show a statistically significant difference, you can attribute it to that headline change.

That said, there are times when multivariate testing makes sense, especially if you have high traffic volume and want to test multiple elements simultaneously. The key is having the right infrastructure to manage complex tests without getting overwhelmed by data.
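To see why multivariate tests demand so much traffic, count the combinations. In a full-factorial multivariate test, every version of every element is paired with every other, so the number of variations is the product of the options per element. The element names, options, and 1,000-visitors-a-day figure below are hypothetical.

```python
from itertools import product

# Hypothetical test plan: each page element and the versions you want to try.
elements = {
    "headline": ["current", "benefit-focused"],
    "cta_text": ["Submit", "Get My Free Guide"],
    "hero_image": ["product shot", "customer photo", "illustration"],
}

# Full factorial: every combination of one option per element.
combinations = list(product(*elements.values()))
n_cells = len(combinations)  # 2 x 2 x 3

daily_visitors = 1_000  # assumed traffic level
print(f"{n_cells} combinations, about {daily_visitors // n_cells} visitors each per day")
```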

Statistical significance is the key phrase here. You can't call a test after 50 visitors and declare a winner. You need enough data to be confident that the results aren't just random chance. Generally, you want at least 95% confidence and enough conversions per variation to make the math work. For most sites, this means running tests for at least one to two weeks and collecting thousands of visitors per variation, often tens of thousands when your baseline conversion rate is low and the lift you're looking for is modest.
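"Enough data" can be estimated before you launch. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the function name and the 80% power default (a common convention) are assumptions, and most testing platforms run an equivalent calculation for you.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, relative_lift: float,
                              alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variation for a two-sided two-proportion z-test.

    baseline_rate: control conversion rate (e.g. 0.02 for 2%).
    relative_lift: smallest relative improvement worth detecting (0.20 = +20%).
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# A 2% baseline and a hoped-for 20% relative lift (2.0% -> 2.4%)
print(sample_size_per_variation(0.02, 0.20))
```

For a 2% baseline and a 20% relative lift, this comes out to roughly 21,000 visitors per variation. Larger lifts or higher baseline rates shrink that number quickly.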

The challenge is that calculating sample sizes, managing traffic splits, and interpreting statistical significance can get complicated fast. That's why purpose-built A/B testing platforms handle all the math for you, automatically calculating when you have enough data to call a winner and preventing common statistical errors that lead to false positives.

The Creative Work: What to Actually Test

Now we get to the fun part. What should you change? The short answer is: everything, eventually. But you need to prioritize based on potential impact.

Start with your headline. It's the first thing people see, and it determines whether they'll bother reading anything else. Test benefit-focused headlines against feature-focused ones. Test questions against statements. Test specificity against broad claims. A headline that says "Get 40% More Qualified Leads in 60 Days" will outperform "Improve Your Marketing" almost every time because it's specific, benefit-driven, and time-bound.

Your call-to-action comes next. The difference between "Submit" and "Get My Free Guide" can be substantial. One is passive and effort-focused. The other is active and benefit-focused. Test action verbs, test adding urgency, test emphasizing value over action. Small words, big impact.

Social proof deserves serious attention because it works. If you have customer testimonials, test different formats. Does a video testimonial outperform a text quote? Do specific results ("We increased revenue by 47%") beat general praise ("This product is amazing")? Do customer logos at the top of the page build trust faster than testimonials buried at the bottom? You won't know until you test.

The length and structure of your copy matter too. Some audiences want all the details before they'll convert. Others want to skim three bullet points and click. Test a long-form version with detailed explanations against a short-form version with just the essentials. The results might surprise you.

And here's something most people overlook: test your page load speed and mobile experience. Google research shows that 53% of mobile users abandon sites that take longer than three seconds to load. If your beautifully designed page takes six seconds to appear, you've already lost half your audience before they even see your headline.

Making Implementation Actually Happen

Theory is worthless without execution. Once you've designed your test, you need to actually set it up and run it properly. This is where having the right tools becomes critical. You need something that can serve different variations reliably, track conversions accurately, and give you clean data to analyze.

The technical setup doesn't have to be complicated. Most modern platforms let you target specific page elements using simple selectors, then replace the content with your test variations. You set your traffic split (usually 50/50 for A/B tests), define what counts as a conversion, and launch. The tool handles the rest.
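Commercial tools handle assignment in their own ways (often with cookies), but one common approach is to hash a stable visitor ID so that each person always lands in the same bucket. This is a minimal sketch assuming you already have such an ID; `assign_variation`, the experiment name, and the visitor IDs are hypothetical.

```python
import hashlib


def assign_variation(visitor_id: str, experiment: str, share_a: float = 0.5) -> str:
    """Deterministically bucket a visitor into 'A' or 'B'.

    Hashing experiment name + visitor ID means a returning visitor always sees
    the same version, and different experiments split traffic independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # first 32 bits of the hash, scaled to [0, 1)
    return "A" if bucket < share_a else "B"


counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variation(f"visitor-{i}", "headline-test")] += 1
print(counts)  # close to an even 50/50 split
```

Changing `share_a` adjusts the split (for example, 0.9 sends 90% of traffic to A), while the hash keeps each visitor's assignment stable.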

Quality assurance matters more than people think. Before you push a test live, check it on multiple devices and browsers. Make sure your variations look right on mobile. Verify that your tracking is firing correctly. A broken test that gives you bad data is worse than no test at all because it leads you to wrong conclusions.
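One quick check before trusting any results is whether traffic actually split the way you configured it. A large gap between the expected and observed split (often called a sample ratio mismatch) usually points to a tracking or redirect problem rather than a real effect. The minimal sketch below runs a chi-square goodness-of-fit test on visitor counts; the function name is hypothetical and the counts are made up for illustration.

```python
from math import erfc, sqrt


def sample_ratio_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value (1 degree of freedom) for an observed A/B split."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    return erfc(sqrt(chi2 / 2))


print(sample_ratio_p_value(5_020, 4_980))  # small imbalance: large p-value, nothing alarming
print(sample_ratio_p_value(5_400, 4_600))  # suspicious: tiny p-value, check the setup
```

Many practitioners treat a very small p-value here (for example, below 0.001) as a signal to pause and fix the setup before reading any conversion numbers.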

If you're not technical or don't have a developer on your team, look for no-code A/B testing solutions that let you build and launch tests through a visual editor. You should be able to point, click, edit text, and launch without touching a single line of code.

Reading Your Results Like a Pro

After your test has run long enough to collect meaningful data, it's time to analyze. This is where a lot of people make mistakes. They see that Variation B is winning by 3% and immediately declare victory. But is that difference statistically significant? Or could it just be noise?

Most A/B testing platforms will calculate statistical significance for you, but you should understand what it means. Reaching 95% confidence means that if the two versions actually performed the same, a difference at least as large as the one you're seeing would show up by chance less than 5% of the time. That's the standard threshold for calling a winner. Anything below that, and you should keep the test running or call it inconclusive.
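Under the hood, many tools run something like a two-proportion z-test. This is a minimal sketch of that test, not the exact method any particular platform uses; the function name and the conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates (pooled z-test)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results on 10,000 visitors per variation
p_small = two_proportion_p_value(200, 10_000, 240, 10_000)  # 2.0% vs 2.4%
p_large = two_proportion_p_value(200, 10_000, 280, 10_000)  # 2.0% vs 2.8%
print(f"2.0% vs 2.4%: p = {p_small:.3f}, significant at 95%: {p_small < 0.05}")
print(f"2.0% vs 2.8%: p = {p_large:.4f}, significant at 95%: {p_large < 0.05}")
```

In the first case the p-value lands just above 0.05: a 20% relative lift that still doesn't clear the 95% bar at this sample size. The second case clears it easily.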

But statistical significance isn't the whole story. You also need to consider practical significance. If Variation B wins by 0.5% but requires a complete redesign of your page, is that worth it? Probably not. You're looking for wins that are both statistically solid and meaningful enough to justify implementation.
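One way to weigh practical significance is to look at a confidence interval for the lift and compare its lower end with the smallest improvement that would justify the work. The sketch below uses a simple (Wald) interval for the difference in conversion rates; `min_worthwhile`, the helper names, and the traffic numbers are hypothetical, and you would set the threshold from your own costs.

```python
from math import sqrt
from statistics import NormalDist


def lift_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple:
    """Wald confidence interval for the absolute difference in conversion rate (B - A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


def interpret(low: float, high: float, min_worthwhile: float) -> str:
    if high < 0:
        return "B is worse"
    if low <= 0:
        return "inconclusive"
    if low >= min_worthwhile:
        return "significant and large enough to act on"
    return "significant, but the lift may be too small to justify the work"


# Hypothetical high-traffic test: 2.0% vs 2.1% on 1,000,000 visitors each
low, high = lift_confidence_interval(20_000, 1_000_000, 21_000, 1_000_000)
min_worthwhile = 0.002  # hypothetical bar: +0.2 percentage points
print(f"B - A is between {low:.4%} and {high:.4%}")
print(interpret(low, high, min_worthwhile))
```

Here the interval excludes zero, so the lift is statistically real, but it falls short of the hypothetical 0.2-percentage-point bar.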

Sometimes you'll run tests that don't produce clear winners. That's okay. Inconclusive tests still teach you something. They tell you that element probably isn't the main driver of conversions, so you should look elsewhere. This process of elimination is valuable because it focuses your efforts on the things that actually matter.

The best testing platforms give you more than just "which variation won." They provide heatmaps, session recordings, and segmented data that help you understand why one variation performed better. This deeper insight is what transforms you from someone who runs tests into someone who truly understands their customers.

Turning Wins Into Lasting Growth

Here's the secret that separates companies that succeed with testing from those that don't: consistency. Running one test and calling it done won't move the needle. Running tests continuously, learning from each one, and building a culture of experimentation will transform your business.

When you find a winner, implement it and move on to the next test. Each win becomes the new baseline, and you test again to see if you can do even better. Over time, these incremental improvements compound into dramatic results. A company that improves its conversion rate by 5% every quarter isn't growing linearly. Because each gain builds on the previous baseline, its improvements compound exponentially.
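A quick way to see the compounding is to apply a 5% relative lift each quarter to a starting rate. The 2% starting rate and the perfectly steady 5% lift are illustrative assumptions; real gains are rarely that consistent.

```python
baseline_rate = 0.02   # hypothetical starting conversion rate
quarterly_lift = 0.05  # 5% relative improvement each quarter
quarters = 8           # two years

rate = baseline_rate
for quarter in range(1, quarters + 1):
    rate *= 1 + quarterly_lift  # each winner becomes the new baseline
    print(f"Q{quarter}: {rate:.4%}")

compounded = rate / baseline_rate - 1
linear = quarterly_lift * quarters  # what you'd get if the gains simply added up
print(f"Compounded lift: {compounded:.1%} vs. linear: {linear:.1%}")
```

After eight quarters the rate is about 47.7% higher than where it started, compared with 40% if the gains simply added up.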

The insights you gain from testing should inform everything else you do. If you discover that benefit-focused language outperforms feature descriptions, use that learning in your email campaigns, your ad copy, and your sales presentations. If you find that case studies build more trust than abstract testimonials, make case studies central to your marketing strategy. Testing doesn't just optimize pages. It optimizes your understanding of what actually persuades your customers.

Smart marketers document every test they run, tracking not just the results but the insights gained. Over time, you build a knowledge base that becomes one of your most valuable assets. You'll know from your own data what works for your audience, not what some marketing guru claims should work.

Common Pitfalls to Avoid

Even experienced marketers make mistakes when testing. One of the biggest is stopping tests too early. You see that Variation B is ahead after three days, and you declare victory. But conversion rates fluctuate, especially with small sample sizes. Stopping early dramatically increases your chances of implementing a "winner" that isn't actually better.
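The cost of peeking can be demonstrated with a small simulation. The sketch below runs A/A tests, where both versions share the same true 10% conversion rate, and compares checking for significance at ten interim looks with analyzing only once at the end. All parameters (number of simulations, looks, visitors, rate, seed) are arbitrary assumptions chosen to keep it fast.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided pooled two-proportion z-test p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1):
        return 1.0  # no variation in the data yet, so nothing to test
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rates(sims: int = 500, looks: int = 10, visitors_per_look: int = 200,
                         rate: float = 0.10, seed: int = 42) -> tuple:
    """Simulate A/A tests (no real difference) under two stopping rules.

    Returns (peeking_rate, fixed_rate): the share of tests that declared a winner
    when checked at every look vs. only at the final look.
    """
    rng = random.Random(seed)
    peek_hits = fixed_hits = 0
    for _ in range(sims):
        conv_a = conv_b = n = 0
        declared_winner = False
        for _ in range(looks):
            for _ in range(visitors_per_look):
                conv_a += rng.random() < rate  # both arms share the same true rate
                conv_b += rng.random() < rate
            n += visitors_per_look
            if p_value(conv_a, n, conv_b, n) < 0.05:
                declared_winner = True  # a peeker would stop here and declare a winner
        peek_hits += declared_winner
        fixed_hits += p_value(conv_a, n, conv_b, n) < 0.05  # only the final look counts
    return peek_hits / sims, fixed_hits / sims


peek_rate, fixed_rate = false_positive_rates()
print(f"False winners when peeking at every look: {peek_rate:.1%}")
print(f"False winners with one final analysis:    {fixed_rate:.1%}")
```

Since there is no real difference in any of these tests, every "winner" is a false positive. The single final analysis lands near the expected 5%, while declaring a winner at the first significant look typically produces several times as many.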

Another common mistake is testing too many things at once without enough traffic. If you're running a five-way test and getting 1,000 visitors per day, each variation only gets 200 visitors per day. That's not nearly enough to reach statistical significance in a reasonable time unless your conversion rate lift is massive. Be realistic about what you can test given your traffic levels.
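You can turn a sample-size target into a rough test duration. The sketch assumes, for illustration, that your sample-size calculation calls for about 21,000 visitors per variation (roughly what a 2% baseline and a 20% relative lift require at 95% confidence and 80% power) and that traffic splits evenly; `days_to_finish` is a hypothetical helper.

```python
from math import ceil


def days_to_finish(required_per_variation: int, daily_visitors: int, n_variations: int) -> int:
    """Days until every variation reaches the required sample size, assuming an even split."""
    per_variation_per_day = daily_visitors / n_variations
    return ceil(required_per_variation / per_variation_per_day)


# Assumed: 1,000 visitors/day and ~21,000 visitors needed per variation
for k in (2, 5):
    print(f"{k} variations: about {days_to_finish(21_000, 1_000, k)} days")
```

At 1,000 visitors a day, that's about 42 days with two variations and about 105 days with five.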

People also tend to ignore external factors. If you run a test during Black Friday and your conversion rate spikes, that's probably not because your new headline is brilliant. It's because buying intent is sky-high. Seasonal effects, marketing campaigns, press coverage, and other external factors can contaminate your results if you're not careful.

The solution? Run tests for full business cycles when possible, use proper statistical calculations, and maintain consistent traffic sources throughout the test. Professional A/B testing software helps prevent these mistakes by warning you when sample sizes are too small, flagging unusual traffic patterns, and ensuring you're making decisions based on solid data, not lucky flukes.

Getting Started Today

You don't need a massive budget or a huge audience to start testing. You just need a willingness to challenge your assumptions and let data guide your decisions. Pick one element on your most important landing page. Form a hypothesis about how you could improve it. Create a variation. Run the test for long enough to get real data. Analyze the results. Implement what you learn.

That's it. That's the process. And the tools to do this are more accessible than ever. Whether you're a solo entrepreneur or part of a larger marketing team, modern platforms make it possible to run professional-grade experiments without needing a technical background or a data science degree.

The companies winning in their markets aren't necessarily the ones with the biggest budgets or the most innovative products. They're the ones who understand their customers better and optimize relentlessly based on real behavior rather than opinions. Every test gets them closer to the version of their page that converts at the highest possible rate.

Your landing page right now is probably leaving money on the table. The question isn't whether you should start testing. It's whether you can afford not to. Because while you're debating it, your competitors are running experiments, learning what works, and pulling further ahead.

Take Action Now: Start Testing in the Next 10 Minutes

Here's your action plan. Right now, today, you can set up your first A/B test and start collecting data that will improve your conversions.

First, identify your highest-traffic landing page. That's where you'll get results fastest. Second, pick one element to test. If you're not sure where to start, test your headline. It has an outsized impact, and it's easy to create variations. Third, write two headline alternatives that are meaningfully different from each other and from your current headline.

Fourth, set up your A/B test using a proven platform that handles the technical heavy lifting for you. Fifth, let the test run for at least one full week and until it reaches the sample size you planned for, rather than stopping the moment it first shows significance. Sixth, analyze the results, implement the winner, and plan your next test.

The difference between a 2% conversion rate and a 5% conversion rate might not sound dramatic, but it's the difference between barely surviving and thriving. It's the difference between constantly needing more traffic to hit your goals and growing sustainably with the audience you already have.

That's the power of systematic optimization. That's why A/B testing matters. And that's why thousands of marketers, founders, and growth teams trust abtesting.ai to run experiments that drive real revenue growth.

Stop leaving money on the table. Stop guessing what your customers want. Start testing today and let real data show you exactly how to maximize every visitor, every click, and every conversion opportunity you have. Your future self, looking at dramatically higher conversion rates three months from now, will thank you for taking action today.

Address

Sporerweg 16

94234 Viechtach, Germany

Contact Us

(049) 170 499 6273

© 2026 Onlinebizoffers. All rights reserved.
